Intel recently announced a new transistor architecture, RibbonFET, which rearranges the transistor’s gate and channel so that the channel can be “sized” more continuously. This lets the chip designer choose the size configuration that best balances power and efficiency.

It essentially consists of stacked “ribbons” of silicon that pass through the gate and are surrounded by it on all sides. Since each ribbon is planar, its width can be sized continuously. The current industry standard, FinFET, has similar performance characteristics, but its sizing is quantized.

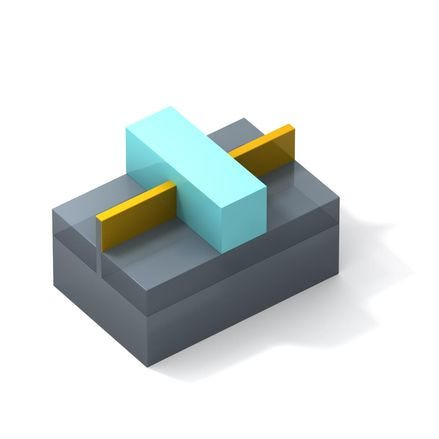

In a FinFET, a “fin” of channel sticks up out of the surface and is covered by the gate. This carries charge more efficiently than a flat channel, but has the disadvantage that you can’t continuously size the fin. You can only add more fins, so a transistor often ends up larger or smaller than the design really needs, which leads to a decrease in efficiency.
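To make the quantized-versus-continuous distinction concrete, here is a minimal sketch using the usual textbook approximations for effective channel width: a fin contributes its two sidewalls plus its top, and a ribbon contributes its full perimeter. All dimensions (50 nm fin height, 7 nm fin width, 4 ribbons of 5 nm thickness) are made-up illustrative values, not figures from any real process, and the function names are my own.

```python
def finfet_width_nm(n_fins, fin_height_nm=50.0, fin_width_nm=7.0):
    """Effective width of n_fins fins: the gate covers two sidewalls and the top.
    Dimensions are illustrative assumptions, not real process numbers."""
    return n_fins * (2 * fin_height_nm + fin_width_nm)


def ribbon_width_nm(sheet_width_nm, n_sheets=4, sheet_thickness_nm=5.0):
    """Effective width of a ribbon stack: the gate wraps each ribbon's perimeter."""
    return n_sheets * 2 * (sheet_width_nm + sheet_thickness_nm)


def closest_finfet(target_nm):
    """FinFET sizing is quantized: pick the whole number of fins nearest the target."""
    per_fin = finfet_width_nm(1)
    n_fins = max(1, round(target_nm / per_fin))
    return n_fins, finfet_width_nm(n_fins)


def ribbon_for_target(target_nm, n_sheets=4, sheet_thickness_nm=5.0):
    """Ribbon sizing is continuous: solve for the sheet width that hits the target."""
    return target_nm / (2 * n_sheets) - sheet_thickness_nm


target = 160.0  # desired effective width in nm (arbitrary example)
n_fins, fin_result = closest_finfet(target)
sheet_width = ribbon_for_target(target)

print(f"FinFET: {n_fins} fin(s) gives {fin_result} nm (off by {abs(fin_result - target)} nm)")
print(f"Ribbon: sheet width {sheet_width} nm gives {ribbon_width_nm(sheet_width)} nm")
```

With these assumed numbers, the FinFET has to settle for the nearest multiple of one fin's width, while the ribbon stack can land on the target. Real processes restrict ribbon widths through design rules, so "continuous" here is an idealization.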

But this is just the start of Intel’s stacking ambitions. They’ve also worked on and demonstrated 3D stacking of the transistors themselves: they stacked an NFET and a PFET on top of each other to create an inverter that uses half the area of a normal inverter with comparable electrical characteristics. This could mean that predictions of the end of Moore’s law could still be disproved - transistor density could double or more as more transistors are fit onto a single chip, and so the relentless march of compute will not stop. It’s exciting.

There’s also work on different types of devices. IBM has recently been working on modifying “phase change materials” to simulate synapse-like electrical activity, in order to support neuromorphic (brain-like) algorithms for detecting objects using less area and less power. They’ve used the short-term electrostatic volatility and long-term non-volatility of phase change materials to simulate the short-term electrical volatility and long-term plasticity of synapses, and used that to do practical tasks. It promises to perform AI-like tasks with less space and less power, making AI more and more available to everyone.

Along with these stacked transistors, all kinds of companies are developing newer and newer neural network accelerators and other new forms of chip architecture. IBM, not content with developing 2nm transistors and the neuromorphic devices above, has released its own AI chip for making neural network inference and training faster. It’s quite similar to Google’s older TPU in its matrix multiply hardware, but uses interesting bit formats to take advantage of the fact that AI only needs “approximate” correctness. That is an interesting topic in and of itself: it is a not widely known fact that you can generally reduce the resolution of neural network parameters from 32-bit floats to 8-bit ints without losing too much accuracy, for a 4-fold decrease in space. This indicates the fundamental robustness, and also the overparameterization, of most AI models.
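The 32-bit to 8-bit reduction can be sketched in a few lines. This is a minimal illustration of symmetric per-tensor quantization, one common scheme among several, and not a description of what IBM's chip or any particular framework does. The weights are random stand-ins drawn from a Gaussian, and the function names are my own.

```python
import random
import struct


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


random.seed(0)
# Stand-in "weights": small values centred on zero (an assumption, not real model data).
weights = [random.gauss(0.0, 0.05) for _ in range(1000)]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

max_err = max(abs(a - b) for a, b in zip(weights, restored))
fp32_bytes = len(struct.pack(f"{len(weights)}f", *weights))  # 4 bytes per weight
int8_bytes = len(struct.pack(f"{len(q)}b", *q))              # 1 byte per weight

print("storage:", fp32_bytes, "bytes ->", int8_bytes, "bytes")
print("worst-case rounding error:", max_err, "(at most half a step:", scale / 2, ")")
```

The rounding error per weight is bounded by half a quantization step. Whether that is "not too much" for a given model still has to be checked by measuring accuracy on real data.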

Beyond the TPU and similar matrix multiply chips, there are also various accelerators for speeding up specific neural network operations. Using tile-flexible and other paradigms, researchers have designed accelerators which can quickly run sparse models, which can be created by pruning methods like Learning Rate Rewinding. These sparse models promise a 10 times speedup over dense models, in less space to boot. The dream is to one day be able to run GPT-3 or Stable Diffusion on a $20 chip, a dream which might become a reality soon (I’m hoping to be able to figure that out soon). There’s even work on Fourier transform based models of convolutional neural networks (for, of course, convolution in time is multiplication in frequency) for even larger speedups.
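The "convolution in time is multiplication in frequency" remark can be checked numerically. The sketch below uses a naive O(n²) discrete Fourier transform written with only the standard library, so it shows the identity rather than the speedup. A real implementation would use an FFT, which computes the same transform in O(n log n). The signal and kernel are arbitrary example values, zero-padded so that circular and ordinary convolution agree.

```python
import cmath


def dft(x, inverse=False):
    """Naive discrete Fourier transform (same result as an FFT, just slower)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        out.append(s / n if inverse else s)
    return out


def circular_conv_direct(a, b):
    """Circular convolution computed directly from its definition."""
    n = len(a)
    return [sum(a[j] * b[(i - j) % n] for j in range(n)) for i in range(n)]


def circular_conv_fourier(a, b):
    """Same convolution: transform both, multiply pointwise, transform back."""
    product = [x * y for x, y in zip(dft(a), dft(b))]
    return [v.real for v in dft(product, inverse=True)]


# Arbitrary example data, zero-padded to length 8.
signal = [1.0, 2.0, 3.0, 4.0, 0.0, 0.0, 0.0, 0.0]
kernel = [0.5, -1.0, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0]

direct = circular_conv_direct(signal, kernel)
via_fourier = circular_conv_fourier(signal, kernel)

print("direct:     ", direct)
print("via Fourier:", [round(v, 9) for v in via_fourier])
```

The two results agree up to floating-point rounding. The payoff in hardware comes when the kernel is large enough that the transform cost is smaller than doing every multiply-accumulate directly.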

In addition, there are new chip architecture paradigms being developed. My lab, the AVLSI lab at Yale, specializes in asynchronous architecture, a type of chip architecture which has no clock for synchronization: there is only local synchronization between cells (which is what you call a combination of gates). Since each component can then run as fast as it can, and there are no global effects because there is no clock, it promises higher speed, better efficiency and easier debugging. In addition, it can support ultra low power applications, as there’s no need for a power-intensive clock. The only issue is that it’s not a paradigm supported by most chip design tools, so the lab has had to develop a whole suite of tools to support it, called ACT. But it’s a growing field.
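As a rough intuition for "each component can run as fast as it can", here is a toy timing model of a linear pipeline. It is my own simplification and not how ACT or any real asynchronous design flow works: it assumes each stage's delay varies per item, that handshakes cost nothing, and that there is unlimited buffering between stages. Under those assumptions, a clocked pipeline pays the worst-case delay on every step, while a locally synchronized one pays only what each step actually takes.

```python
import random


def clocked_finish_times(delays):
    """delays[i][s] is the time item i needs in stage s.
    A global clock must be slow enough for the worst case anywhere,
    so every item advances one stage per worst-case period."""
    n_stages = len(delays[0])
    period = max(max(row) for row in delays)
    return [(i + n_stages) * period for i in range(len(delays))]


def handshake_finish_times(delays):
    """Local synchronization only: a stage starts item i as soon as the previous
    stage has handed it over and the stage itself has finished item i-1.
    Assumes zero handshake overhead and unbounded buffering (toy model)."""
    n_items, n_stages = len(delays), len(delays[0])
    done = [[0] * n_stages for _ in range(n_items)]
    for i in range(n_items):
        for s in range(n_stages):
            arrived = done[i][s - 1] if s > 0 else 0
            stage_free = done[i - 1][s] if i > 0 else 0
            done[i][s] = max(arrived, stage_free) + delays[i][s]
    return [row[-1] for row in done]


random.seed(1)
# Made-up data-dependent delays: 20 items through 4 stages, 1 to 10 time units each.
delays = [[random.randint(1, 10) for _ in range(4)] for _ in range(20)]

clocked = clocked_finish_times(delays)
handshake = handshake_finish_times(delays)

print("clocked pipeline finishes last item at t =", clocked[-1])
print("handshake pipeline finishes last item at t =", handshake[-1])
```

In this model the handshake pipeline can never finish later than the clocked one. In real silicon the handshake circuitry has its own delay and area cost, so the actual advantage depends on how much the stage delays vary.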

So, stepping out of the weeds of technical description, what all this leads towards is a new and exciting world of ever smaller, ever more efficient, ever cheaper chips for running massively complex programs. Talk of the end of Moore’s law is premature, and in the future we could have more and more compute everywhere. In 2084, the AI models which will saturate the world around us won’t run on a massive supercomputer like Skynet. Instead they will run on small, compact devices, embedded in chips in every device and computer: small chips which will be able to run massive models with minimal power consumption. It’ll be a decentralized revolution to a large degree. There will still be supercomputers built from highly advanced AI chips which will run massive models, but on a day-to-day basis, you will interact with the smaller models run on small, cheap chips.