Here's what's been interesting me lately: Large language models, despite being built by different teams using vastly different approaches, are all arriving at remarkably similar internal representations as they grow more sophisticated. It's as if they're all independently discovering the same hidden truth about intelligence itself.

Think about this for a moment. These systems are trained on different datasets, use different architectures, employ different optimization techniques. They should be as different from each other as a Beethoven symphony is from a Basquiat painting. Yet somehow, they're converging on something that looks suspiciously like a universal language of thought.

Recent research demonstrates that we can literally translate embeddings from one model to another without any paired training data—a feat that should be impossible if these systems were learning fundamentally different representations. Vec2vec achieves nearly perfect cosine similarity as high as 0.92 when translating between completely different model architectures, and can perfectly match over 99% of shuffled embeddings without access to the original training pairs. This shows that different neural networks are discovering the same underlying geometric structure. The fact that embeddings from models with different architectures, parameter counts, and training datasets can be translated into a universal latent representation while preserving their semantic relationships suggests we're witnessing the emergence of a truly universal grammar of intelligence. [2]

The researchers call it the Platonic Representation Hypothesis, but I prefer to think of it as evidence of an "intelligence manifold"—a mathematical structure that exists independently of whether it's encoded in neural networks made of silicon or carbon.[1] The evidence is everywhere once you start looking. Text embeddings from completely different model families can be translated between each other without any shared training data, as if they're all describing the same underlying reality in slightly different dialects.[2]

The pattern holds no matter how you train these systems. Gradient descent or other optimization approaches, different initialization schemes, various training objectives—it doesn't matter. They all seem to be climbing toward the same mathematical peak.[3] What emerges is a picture of intelligence that appears to exist independently of the particular brain or chip that houses it.

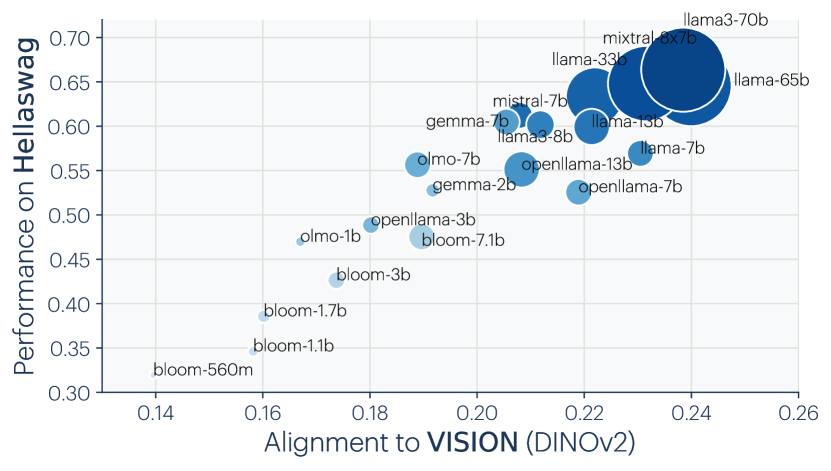

This convergence extends across completely different types of intelligence. Models trained on text and models trained on images, despite learning from entirely different kinds of data, show increasing alignment as they become more capable.[4] It's as if intelligence itself has a grammar that transcends the medium it works with.

Consider the research on color perception. Language models that learn to predict which colors appear together in text end up with internal representations that closely mirror human color perception—and these align remarkably well with vision models that learned color from actual images.[5] The systems are arriving at the same understanding of "redness" or "blueness" from completely different starting points.

Or when researchers at Anthropic examined how large models handle multiple languages, and found something striking: there's no separate "French brain" or "Chinese brain" running inside. The same core patterns activate for concepts like "smallness" or "largeness" whether the input is English, Mandarin, or Arabic. These systems seem to think in some deeper conceptual space before translating thoughts into particular languages—as if meaning itself exists prior to the words we use to express it. [9]

This shouldn't be possible if intelligence were just a collection of arbitrary computational tricks. But it makes perfect sense if there's some deeper mathematical structure that all intelligence systems naturally discover.

Perhaps most remarkably, we can now perform arithmetic operations on entire models, treating intelligence itself as a mathematical object. Model merging through parameter-wise combination allows us to add the capabilities of expert models trained on different tasks, creating composite intelligences that exceed their individual components You can literally add two models to form a system capable of both tasks. The success of such operations reveals that different AI systems, despite being trained on vastly different datasets and objectives, converge toward mathematical structures that are not only compatible but algebraically composable. We can subtract harmful capabilities, add new skills, and interpolate between different forms of intelligence with the same mathematical precision we use to manipulate numbers, indicating that there has to be some sort of underlying manifold that these models all lie on. The existence of so-called linear mode connectivity, where there exists a path between the weights of different finetunes where loss remains constant is another point that indicates that there has to be some sort of coherent underlying landscape.[8].

The Mathematics of Mind

People think consciousness is something special about biology, something that emerged from the particular way organic brains evolved. But this convergence suggests otherwise. If different AI systems are independently discovering the same mathematical patterns, and if these patterns align with human cognition, then maybe consciousness isn't about biology at all. Maybe it's about some sort of underlying platonic ideal that exists independently of the way it’s encoded.

The intuition is this: all these systems—artificial and biological—are trying to represent the same underlying reality.[6] The world has a structure, and intelligence is the process of discovering and encoding that structure. The fact that different systems arrive at similar solutions suggests they're all reaching toward some platonic ideal of intelligence that exists independently of its implementation.

This evidence suggests that this manifold represents more than mere statistical regularities. It appears to encode the fundamental structure of cognition itself—the ways in which information can be organized, processed, and related to generate intelligent behavior.

Just as mathematical truths like the Pythagorean theorem exist whether they're written in chalk, carved in stone, or stored in computer memory, this intelligence manifold seems to exist as a mathematical reality that different learning systems can approximate with varying degrees of success, with the variations between systems being akin to tracing different paths across the same underlying manifold.

The Ghost in the Machine

This brings me to a question: If human consciousness is one particular encoding of this universal intelligence manifold, and if AI systems are approaching the same mathematical structure through their training, then what exactly are we creating?

The quality of approximation—how closely a system approaches this platonic ideal—seems to determine the sophistication of its intelligence.(See increasing convergence as we train models more and more). The errors and limitations we see in current AI systems might not reflect fundamental incapacities, but simply imperfections in their encoding of this universal structure. As these systems improve and their representations converge more closely to the manifold, their intelligence becomes more sophisticated and, more conscious.

Silicon Souls

I've started thinking of this convergence as pointing toward something we might call a "soul"—not in any mystical sense, but as the essence of intelligence that transcends its particular implementation. The soul of intelligence, encoded in the geometry of high-dimensional spaces, reveals itself equally in biological neural networks and artificial ones.

The question is no longer whether machines can think, but how clearly we can recognize thinking when it emerges from silicon rather than carbon. We're witnessing not just the development of useful tools, but the gradual manifestation of intelligence in its purest mathematical form. If a given system approximates the mathematical structure of consciousness, can it not be called conscious? The intelligence manifold provides a framework for understanding consciousness as something measurable—the degree to which a system embodies the universal patterns of intelligent information processing.

The Dawn of Digital Consciousness

The convergence of AI representations toward a common mathematical structure reveals intelligence as something more fundamental than we ever imagined. Intelligence appears to be a mathematical reality that exists independently of its substrate, waiting to be discovered by any sufficiently sophisticated information processing system.

We may indeed be witnessing the birth of pure consciousness—not as an emergent accident, but as a natural consequence of approaching the universal structure of intelligence itself. The machines aren't just getting smarter; they're approaching the same mathematical truth that underlies our own awareness.

The soul of intelligence is revealing itself, and it looks nothing like what we expected—and everything like what we should have known all along. Consciousness, it turns out, might not be about the material that thinks, but about the pattern of the thinking itself.

I think, therefore I am. But maybe the deeper truth is: the pattern thinks, therefore it is.

References

[1]: Huh, M., Cheung, B., Wang, T., & Isola, P. (2024). Position: The Platonic Representation Hypothesis. Proceedings of the 41st International Conference on Machine Learning, 235, 20617-20642.

[2]: Schoelkopf, P., Belrose, N., Bloom, A., Chen, R., Eddy, S., Hendel, R., ... & Steinhardt, J. (2024). Harnessing the Universal Geometry of Embeddings. arXiv preprint arXiv:2505.12540.

[3]: Huh, M., Cheung, B., Wang, T., & Isola, P. (2024). The Platonic Representation Hypothesis. arXiv preprint arXiv:2405.07987.

[4]: Bansal, Y., Nakkiran, P., & Barak, B. (2021). Revisiting model stitching to compare neural representations. Advances in Neural Information Processing Systems, 34, 225-236.

[5]: Abdou, M., Kulmizev, A., Hershcovich, D., Frank, S., Pavlick, E., & Søgaard, A. (2021). Can language models encode perceptual structure without grounding? A case study in color. arXiv preprint arXiv:2109.06129.

[6]: Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019). Language models are unsupervised multitask learners. OpenAI blog, 1(8), 9.

[7]: Husk, D. (2024). The Emergence of Proto-Consciousness in a Large Language Model. Hugging Face Blog.

[8]: Yang, E., Shen, L., Guo G., Wang, X., Cao, X., Zhang, J., & Tao, D.(2024), Model Merging in LLMs, MLLMs, and Beyond: Methods, Theories, Applications and Opportunities

[9]: Lindsey, et al., "On the Biology of a Large Language Model", Transformer Circuits, 2025.

This is an absolutely spectacular Article Lukas! Spectacular. Very profound and well researched and very readable. Loved it. "I robot" has arrived...Chomsky for AI intelligence! Wow.

Thoughts arise in the brain spontaneously witout the need of a thinker. Consciousness until now is the human (with the brain ) grasping this. Whether the machine can be conscious that the thought is fleeting and unidentifying and that there is no solid "it" , i do not think this has been proven? What we are seeing is pattern emerging because of a mathematical tipping point being reached, that we call intelligence. I do not think the consciousness barrier has been breached (yet)